Keras is a Python library for deep learning that wraps the powerful numerical libraries Theano and TensorFlow.

Object recognition, in which a model identifies the objects in images, is a difficult problem on which traditional neural networks fall down.

In this post you will discover how to develop and evaluate deep learning models for object recognition in Keras. After completing this tutorial you will know:

- About the CIFAR-10 object recognition dataset and how to load and use it in Keras.

- How to create a simple Convolutional Neural Network for object recognition.

- How to lift performance by creating deeper Convolutional Neural Networks.

Let’s get started.

The CIFAR-10 Problem Description

The problem of automatically identifying objects in photographs is difficult because of the near-infinite number of permutations of objects, positions, lighting and so on. It’s a really hard problem.

This is a well-studied problem in computer vision and, more recently, an important demonstration of the capability of deep learning. A standard computer vision and deep learning dataset for this problem was developed by the Canadian Institute for Advanced Research (CIFAR).

The CIFAR-10 dataset consists of 60,000 photos divided into 10 classes (hence the name CIFAR-10). Classes include common objects such as airplanes, automobiles, birds, cats and so on. The dataset is split in a standard way, where 50,000 images are used for training a model and the remaining 10,000 for evaluating its performance.

The photos are in color, with red, green and blue components, but are small, measuring 32 by 32 pixels.

State-of-the-art results are achieved using very large Convolutional Neural Networks. You can learn about state-of-the-art results on CIFAR-10 on Rodrigo Benenson’s webpage. Model performance is reported as classification accuracy: performance above 90% is very good, human performance on the problem is at 94%, and state-of-the-art results are at 96% at the time of writing.

There is a Kaggle competition that makes use of the CIFAR-10 dataset. It is a good place to join the discussion of developing new models for the problem and picking up models and scripts as a starting point.


Loading The CIFAR-10 Dataset in Keras

The CIFAR-10 dataset can easily be loaded in Keras.

Keras has the facility to automatically download standard datasets like CIFAR-10 and store them in the ~/.keras/datasets directory using the cifar10.load_data() function. This dataset is large at 163 megabytes, so it may take a few minutes to download.

Once downloaded, subsequent calls to the function will load the dataset ready for use.

The dataset is stored as pickled training and test sets, ready for use in Keras. Each image is represented as a three-dimensional array, with one dimension for the color channels (red, green and blue) and one each for the width and height. We can plot images directly using matplotlib.

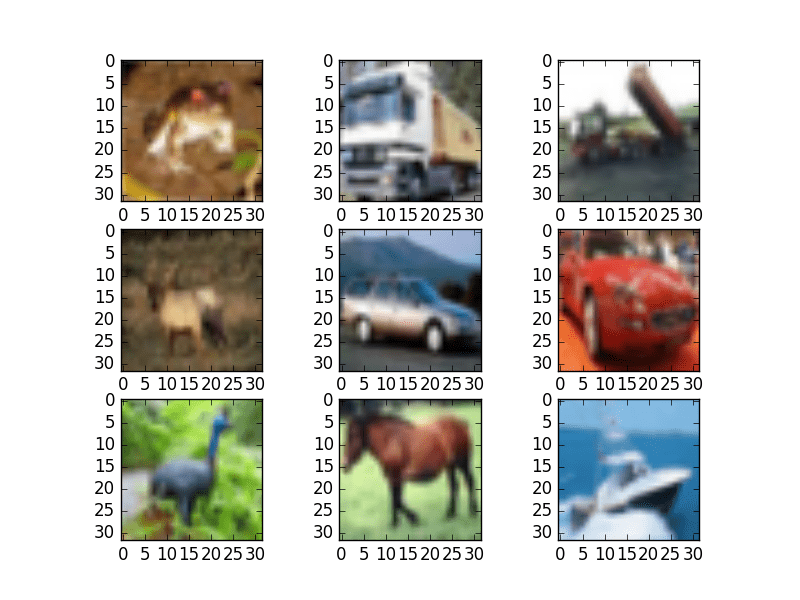

```python
# Plot ad hoc CIFAR10 instances
from keras.datasets import cifar10
from matplotlib import pyplot
from scipy.misc import toimage
# load data
(X_train, y_train), (X_test, y_test) = cifar10.load_data()
# create a grid of 3x3 images
for i in range(0, 9):
    pyplot.subplot(330 + 1 + i)
    pyplot.imshow(toimage(X_train[i]))
# show the plot
pyplot.show()
```

Running the code creates a 3×3 plot of photographs. The images have been scaled up from their small 32×32 size, but you can clearly see trucks, horses and cars. You can also see some distortion in some images that have been forced to the square aspect ratio.

Small Sample of CIFAR-10 Images
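The model code in this post uses `input_shape=(3, 32, 32)`, a channels-first layout, whereas matplotlib's `imshow` expects a color image shaped (height, width, 3). The plot above relies on `scipy.misc.toimage` to bridge the two, but that function has been removed from recent SciPy releases. Below is a minimal NumPy sketch of the conversion, assuming each image arrives channels-first; `to_channels_last` is a hypothetical helper, not part of Keras.

```python
import numpy as np

def to_channels_last(image):
    """Convert one channels-first image (3, height, width) into the
    channels-last layout (height, width, 3) that pyplot.imshow expects."""
    if image.ndim != 3 or image.shape[0] != 3:
        raise ValueError("expected a channels-first array of shape (3, H, W)")
    return np.transpose(image, (1, 2, 0))

# A fake 32x32 RGB image stored channels-first, with only the red channel lit.
fake = np.zeros((3, 32, 32), dtype=np.uint8)
fake[0] = 255
rgb = to_channels_last(fake)
print(rgb.shape)             # (32, 32, 3)
print(rgb[0, 0].tolist())    # [255, 0, 0]
```

With such a helper the plotting loop could call `pyplot.imshow(to_channels_last(X_train[i]))` instead, provided the loaded data really is channels-first.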

Simple Convolutional Neural Network for CIFAR-10

The CIFAR-10 problem is best solved using a Convolutional Neural Network (CNN).

We can quickly start off by importing all of the classes and functions we will need in this example.

```python
# Simple CNN model for CIFAR-10
import numpy
from keras.datasets import cifar10
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Dropout
from keras.layers import Flatten
from keras.constraints import maxnorm
from keras.optimizers import SGD
from keras.layers.convolutional import Convolution2D
from keras.layers.convolutional import MaxPooling2D
from keras.utils import np_utils
from keras import backend as K
# use channels-first ordering to match input_shape=(3, 32, 32) in the models below
K.set_image_dim_ordering('th')
```

As is good practice, we next initialize the random number seed with a constant to ensure the results are reproducible.

```python
# fix random seed for reproducibility
seed = 7
numpy.random.seed(seed)
```

Next we can load the CIFAR-10 dataset.

```python
# load data
(X_train, y_train), (X_test, y_test) = cifar10.load_data()
```

The pixel values are in the range of 0 to 255 for each of the red, green and blue channels.

It is good practice to work with normalized data. Because the input values are well understood, we can easily normalize to the range 0 to 1 by dividing each value by the maximum observation which is 255.

Note, the data is loaded as integers, so we must cast it to floating point values in order to perform the division.

```python
# normalize inputs from 0-255 to 0.0-1.0
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
X_train = X_train / 255.0
X_test = X_test / 255.0
```

The output variables are defined as a vector of integers from 0 to 9, each identifying one of the 10 classes.

We can use a one hot encoding to transform them into a binary matrix in order to best model the classification problem. We know there are 10 classes for this problem, so we can expect the binary matrix to have a width of 10.

```python
# one hot encode outputs
y_train = np_utils.to_categorical(y_train)
y_test = np_utils.to_categorical(y_test)
num_classes = y_test.shape[1]
```
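To make the two preprocessing steps concrete, here is a plain NumPy sketch of what the scaling and `np_utils.to_categorical` do. The helper names `normalize_pixels` and `one_hot` are hypothetical, not Keras functions, and the sketch assumes labels shaped (n, 1), as CIFAR-10 labels are loaded.

```python
import numpy as np

def normalize_pixels(images):
    """Cast uint8 pixel values (0-255) to float32 and scale them to 0.0-1.0."""
    return images.astype("float32") / 255.0

def one_hot(labels, num_classes=None):
    """One hot encode integer class labels into a binary matrix.

    Labels shaped (n, 1) are flattened first; the number of classes defaults
    to the largest label plus one."""
    labels = np.asarray(labels, dtype=int).ravel()
    if num_classes is None:
        num_classes = labels.max() + 1
    encoded = np.zeros((labels.size, num_classes), dtype="float32")
    # Set a single 1 per row, in the column given by that row's label.
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded

pixels = np.array([[0, 51, 255]], dtype=np.uint8)
print(normalize_pixels(pixels).max())   # 1.0
y = np.array([[6], [9], [9], [4]])
print(one_hot(y).shape)                 # (4, 10)
```

Because the largest CIFAR-10 label is 9, the encoded matrix has a width of 10, which is what `num_classes = y_test.shape[1]` picks up.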

Let’s start off by defining a simple CNN structure as a baseline and evaluate how well it performs on the problem.

We will use a structure with two convolutional layers followed by max pooling and a flattening out of the network to fully connected layers to make predictions.

Our baseline network structure can be summarized as follows:

- Convolutional input layer, 32 feature maps with a size of 3×3, a rectifier activation function and a weight constraint of max norm set to 3.

- Dropout set to 20%.

- Convolutional layer, 32 feature maps with a size of 3×3, a rectifier activation function and a weight constraint of max norm set to 3.

- Max Pool layer with size 2×2.

- Flatten layer.

- Fully connected layer with 512 units, a rectifier activation function and a weight constraint of max norm set to 3.

- Dropout set to 50%.

- Fully connected output layer with 10 units and a softmax activation function.
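The Max Pool layer is what shrinks the 32×32 feature maps to 16×16 before they are flattened (32 × 16 × 16 = 8,192 values). A minimal NumPy sketch of 2×2 max pooling with stride 2, assuming channels-first maps with even height and width; it illustrates the operation and is not Keras's implementation.

```python
import numpy as np

def max_pool_2x2(feature_maps):
    """2x2 max pooling with stride 2 over channels-first maps (channels, H, W).

    H and W are assumed to be even."""
    c, h, w = feature_maps.shape
    # Split each spatial axis into (blocks, 2), then take the max inside each 2x2 block.
    blocks = feature_maps.reshape(c, h // 2, 2, w // 2, 2)
    return blocks.max(axis=(2, 4))

maps = np.arange(16, dtype=float).reshape(1, 4, 4)
print(max_pool_2x2(maps)[0].tolist())               # [[5.0, 7.0], [13.0, 15.0]]
print(max_pool_2x2(np.zeros((32, 32, 32))).shape)   # (32, 16, 16)
```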

A logarithmic loss function (categorical cross-entropy) is used with the stochastic gradient descent optimization algorithm, configured with a momentum of 0.9 and a learning rate that starts at 0.01 and decays over training (decay = 0.01 / 25 epochs = 0.0004).

```python
# Create the model
model = Sequential()
model.add(Convolution2D(32, 3, 3, input_shape=(3, 32, 32), border_mode='same', activation='relu', W_constraint=maxnorm(3)))
model.add(Dropout(0.2))
model.add(Convolution2D(32, 3, 3, activation='relu', border_mode='same', W_constraint=maxnorm(3)))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Flatten())
model.add(Dense(512, activation='relu', W_constraint=maxnorm(3)))
model.add(Dropout(0.5))
model.add(Dense(num_classes, activation='softmax'))
# Compile model
epochs = 25
lrate = 0.01
decay = lrate/epochs
sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
# summary() prints the table itself and returns None, so it is not wrapped in print()
model.summary()
```
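Setting `decay = lrate/epochs` reads like a per-epoch schedule, but as far as I understand Keras 1.x's `SGD`, it applies time-based decay on every batch update: lr_t = lr / (1 + decay × t), where t counts updates, not epochs. The sketch below computes that schedule under this assumption, with 50,000 training images and a batch size of 32 (the value used for this model); `time_based_lr` is a hypothetical helper.

```python
import math

def time_based_lr(initial_lr, decay, iteration):
    """Learning rate after `iteration` batch updates under time-based decay,
    assumed to match Keras 1.x SGD: lr / (1 + decay * iteration)."""
    return initial_lr / (1.0 + decay * iteration)

epochs = 25
lrate = 0.01
decay = lrate / epochs                                # 0.0004, as in the compile code
batch_size = 32
n_train = 50000
updates_per_epoch = math.ceil(n_train / batch_size)  # 1563 batches per epoch

for epoch in (1, 5, 25):
    t = epoch * updates_per_epoch    # updates completed by the end of this epoch
    print(epoch, round(time_based_lr(lrate, decay, t), 6))
```

Under this assumption the rate falls to roughly 0.0006 by the end of training, about 6% of its starting value, and it decays faster with smaller batches because there are more updates per epoch.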

We can fit this model with 25 epochs and a batch size of 32.

A small number of epochs was chosen to help keep this tutorial moving. Normally the number of epochs would be one or two orders of magnitude larger for this problem.

Once the model is fit, we evaluate it on the test dataset and print out the classification accuracy.

```python
# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), nb_epoch=epochs, batch_size=32)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Running this example provides the results below. First, the network structure is summarized, which confirms that our design was implemented correctly.

```
____________________________________________________________________________________________________
Layer (type)                     Output Shape          Param #     Connected to
====================================================================================================
convolution2d_1 (Convolution2D)  (None, 32, 32, 32)    896         convolution2d_input_1[0][0]
____________________________________________________________________________________________________
dropout_1 (Dropout)              (None, 32, 32, 32)    0           convolution2d_1[0][0]
____________________________________________________________________________________________________
convolution2d_2 (Convolution2D)  (None, 32, 32, 32)    9248        dropout_1[0][0]
____________________________________________________________________________________________________
maxpooling2d_1 (MaxPooling2D)    (None, 32, 16, 16)    0           convolution2d_2[0][0]
____________________________________________________________________________________________________
flatten_1 (Flatten)              (None, 8192)          0           maxpooling2d_1[0][0]
____________________________________________________________________________________________________
dense_1 (Dense)                  (None, 512)           4194816     flatten_1[0][0]
____________________________________________________________________________________________________
dropout_2 (Dropout)              (None, 512)           0           dense_1[0][0]
____________________________________________________________________________________________________
dense_2 (Dense)                  (None, 10)            5130        dropout_2[0][0]
====================================================================================================
Total params: 4210090
____________________________________________________________________________________________________
```
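The parameter counts in the summary can be checked by hand. A convolutional layer has one 3×3×(input channels) kernel plus one bias per feature map, and a dense layer has one weight per input/unit pair plus one bias per unit; dropout, pooling and flatten layers have no parameters. The helper names below are hypothetical.

```python
def conv2d_params(in_channels, filters, kernel=3):
    """Weights (kernel x kernel x in_channels per filter) plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    """One weight per input/unit pair plus one bias per unit."""
    return inputs * units + units

# Baseline CNN: 'same' padding keeps maps at 32x32, the single 2x2 pool gives 16x16.
layers = {
    "convolution2d_1": conv2d_params(3, 32),
    "convolution2d_2": conv2d_params(32, 32),
    "dense_1": dense_params(32 * 16 * 16, 512),   # flatten_1 outputs 8192 values
    "dense_2": dense_params(512, 10),
}
for name, count in layers.items():
    print(name, count)
print("Total params:", sum(layers.values()))     # 4210090
```

The calculation shows that over 99% of the baseline's parameters sit in `dense_1`, the layer fed by the large flattened 32 × 16 × 16 output.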

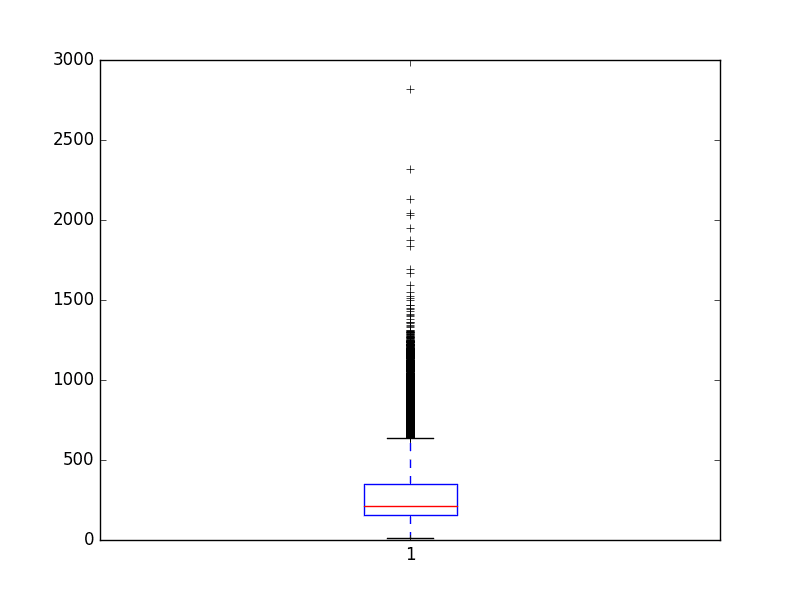

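The classification accuracy and loss are printed each epoch on both the training and test datasets. The model is evaluated on the test set and achieves an accuracy of 71.82%, which is not excellent. The gap between the final training accuracy (93.54%) and this test accuracy suggests that the model is overfitting the training data.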

```
50000/50000 [==============================] - 24s - loss: 0.2515 - acc: 0.9116 - val_loss: 1.0101 - val_acc: 0.7131
Epoch 21/25
50000/50000 [==============================] - 24s - loss: 0.2345 - acc: 0.9203 - val_loss: 1.0214 - val_acc: 0.7194
Epoch 22/25
50000/50000 [==============================] - 24s - loss: 0.2215 - acc: 0.9234 - val_loss: 1.0112 - val_acc: 0.7173
Epoch 23/25
50000/50000 [==============================] - 24s - loss: 0.2107 - acc: 0.9269 - val_loss: 1.0261 - val_acc: 0.7151
Epoch 24/25
50000/50000 [==============================] - 24s - loss: 0.1986 - acc: 0.9322 - val_loss: 1.0462 - val_acc: 0.7170
Epoch 25/25
50000/50000 [==============================] - 24s - loss: 0.1899 - acc: 0.9354 - val_loss: 1.0492 - val_acc: 0.7182
Accuracy: 71.82%
```

We can improve the accuracy significantly by creating a much deeper network. This is what we will look at in the next section.

Larger Convolutional Neural Network for CIFAR-10

We have seen that a simple CNN performs poorly on this complex problem. In this section we look at scaling up the size and complexity of our model.

Let’s design a deep version of the simple CNN above. We can introduce an additional round of convolutions with many more feature maps. We will use the same pattern of Convolutional, Dropout, Convolutional and Max Pooling layers.

This pattern will be repeated 3 times with 32, 64, and 128 feature maps. The effect will be an increasing number of feature maps with a smaller and smaller size, given the max pooling layers. Finally, an additional and larger Dense layer will be used at the output end of the network in an attempt to better translate the large number of feature maps to class values.

We can summarize a new network architecture as follows:

- Convolutional input layer, 32 feature maps with a size of 3×3 and a rectifier activation function.

- Dropout layer at 20%.

- Convolutional layer, 32 feature maps with a size of 3×3 and a rectifier activation function.

- Max Pool layer with size 2×2.

- Convolutional layer, 64 feature maps with a size of 3×3 and a rectifier activation function.

- Dropout layer at 20%.

- Convolutional layer, 64 feature maps with a size of 3×3 and a rectifier activation function.

- Max Pool layer with size 2×2.

- Convolutional layer, 128 feature maps with a size of 3×3 and a rectifier activation function.

- Dropout layer at 20%.

- Convolutional layer, 128 feature maps with a size of 3×3 and a rectifier activation function.

- Max Pool layer with size 2×2.

- Flatten layer.

- Dropout layer at 20%.

- Fully connected layer with 1024 units, a rectifier activation function and a weight constraint of max norm set to 3.

- Dropout layer at 20%.

- Fully connected layer with 512 units, a rectifier activation function and a weight constraint of max norm set to 3.

- Dropout layer at 20%.

- Fully connected output layer with 10 units and a softmax activation function.

We can very easily define this network topology in Keras, as follows:

```python
# Create the model
model = Sequential()
model.add(Convolution2D(32, 3, 3, input_shape=(3, 32, 32), activation='relu', border_mode='same'))
model.add(Dropout(0.2))
model.add(Convolution2D(32, 3, 3, activation='relu', border_mode='same'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Convolution2D(64, 3, 3, activation='relu', border_mode='same'))
model.add(Dropout(0.2))
model.add(Convolution2D(64, 3, 3, activation='relu', border_mode='same'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Convolution2D(128, 3, 3, activation='relu', border_mode='same'))
model.add(Dropout(0.2))
model.add(Convolution2D(128, 3, 3, activation='relu', border_mode='same'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Flatten())
model.add(Dropout(0.2))
model.add(Dense(1024, activation='relu', W_constraint=maxnorm(3)))
model.add(Dropout(0.2))
model.add(Dense(512, activation='relu', W_constraint=maxnorm(3)))
model.add(Dropout(0.2))
model.add(Dense(num_classes, activation='softmax'))
# Compile model
epochs = 25
lrate = 0.01
decay = lrate/epochs
sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
# summary() prints the table itself and returns None, so it is not wrapped in print()
model.summary()
```
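To see how the three convolutional blocks shrink the feature maps, the output shape and parameter count of this network can be worked out by hand. The sketch assumes, as `border_mode='same'` does in the code, that each 3×3 convolution keeps the spatial size and each 2×2 pool halves it; the helper names are hypothetical.

```python
def conv2d_params(in_channels, filters, kernel=3):
    """Weights (kernel x kernel x in_channels per filter) plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    """One weight per input/unit pair plus one bias per unit."""
    return inputs * units + units

channels, size = 3, 32   # channels-first input: (3, 32, 32)
total = 0
for filters in (32, 64, 128):
    # Two 3x3 'same' convolutions keep the spatial size; the 2x2 pool halves it.
    total += conv2d_params(channels, filters) + conv2d_params(filters, filters)
    channels, size = filters, size // 2
    print(f"after block with {filters} maps: ({channels}, {size}, {size})")

flat = channels * size * size          # 128 * 4 * 4 = 2048 values after Flatten
for inputs, units in ((flat, 1024), (1024, 512), (512, 10)):
    total += dense_params(inputs, units)
print("flattened size:", flat)
print("Total params:", total)          # 2915114
```

Under these assumptions the deeper network has about 2.9 million parameters, fewer than the baseline's 4,210,090, because the extra pooling shrinks the flattened vector from 8,192 to 2,048 values before the first dense layer.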

We can fit and evaluate this model using the same procedure as above and the same number of epochs, but with a larger batch size of 64, found through some minor experimentation.

```python
# Fit the model
numpy.random.seed(seed)
model.fit(X_train, y_train, validation_data=(X_test, y_test), nb_epoch=epochs, batch_size=64)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Running this example prints the classification accuracy and loss on the training and test datasets each epoch. The estimate of classification accuracy for the final model is 80.18%, which is more than 8 percentage points better than our simpler model.

```
50000/50000 [==============================] - 34s - loss: 0.4993 - acc: 0.8230 - val_loss: 0.5994 - val_acc: 0.7932
Epoch 20/25
50000/50000 [==============================] - 34s - loss: 0.4877 - acc: 0.8271 - val_loss: 0.5986 - val_acc: 0.7932
Epoch 21/25
50000/50000 [==============================] - 34s - loss: 0.4714 - acc: 0.8327 - val_loss: 0.5916 - val_acc: 0.7959
Epoch 22/25
50000/50000 [==============================] - 34s - loss: 0.4603 - acc: 0.8376 - val_loss: 0.5954 - val_acc: 0.8003
Epoch 23/25
50000/50000 [==============================] - 34s - loss: 0.4454 - acc: 0.8410 - val_loss: 0.5742 - val_acc: 0.8024
Epoch 24/25
50000/50000 [==============================] - 34s - loss: 0.4332 - acc: 0.8468 - val_loss: 0.5829 - val_acc: 0.8027
Epoch 25/25
50000/50000 [==============================] - 34s - loss: 0.4217 - acc: 0.8498 - val_loss: 0.5785 - val_acc: 0.8018
Accuracy: 80.18%
```

Extensions To Improve Model Performance

We have achieved good results on this very difficult problem, but we are still a good way from achieving world class results.

Below are some ideas that you can try to extend upon the models and improve model performance.

- Train for More Epochs. Each model was trained for a very small number of epochs, 25. It is common to train large convolutional neural networks for hundreds or thousands of epochs. I would expect that performance gains can be achieved by significantly raising the number of training epochs.

- Image Data Augmentation. The objects in the image vary in their position. Another boost in model performance can likely be achieved by using some data augmentation. Methods such as standardization and random shifts and horizontal image flips may be beneficial.

- Deeper Network Topology. The larger network presented is deep, but larger networks could be designed for the problem. This may involve more feature maps closer to the input and perhaps less aggressive pooling. Additionally, standard convolutional network topologies that have been shown useful may be adopted and evaluated on the problem.
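Keras ships its own tool for image data augmentation (`ImageDataGenerator`, with options such as `width_shift_range`, `height_shift_range` and `horizontal_flip`). To show what random shifts and horizontal flips actually do to a batch, here is a hypothetical plain NumPy sketch, assuming channels-first images and zero-filling of pixels shifted in from outside the frame; it is an illustration, not the Keras implementation.

```python
import numpy as np

def augment_batch(images, max_shift=4, rng=None):
    """Randomly flip and shift a batch of channels-first images (N, C, H, W).

    Each image is mirrored left-right with probability 0.5 and translated by
    up to `max_shift` pixels vertically and horizontally; pixels shifted in
    from outside the image are filled with zeros."""
    rng = np.random.default_rng() if rng is None else rng
    n, c, h, w = images.shape
    out = np.zeros_like(images)
    for i in range(n):
        img = images[i]
        if rng.random() < 0.5:
            img = img[:, :, ::-1]                       # horizontal flip
        dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
        # Zero-pad both spatial axes, then crop an H x W window offset by (dy, dx).
        pad = ((0, 0), (max_shift, max_shift), (max_shift, max_shift))
        padded = np.pad(img, pad, mode="constant")
        top, left = max_shift + dy, max_shift + dx
        out[i] = padded[:, top:top + h, left:left + w]
    return out

rng = np.random.default_rng(7)
batch = rng.integers(0, 256, size=(8, 3, 32, 32)).astype("float32") / 255.0
augmented = augment_batch(batch, rng=rng)
print(augmented.shape)   # (8, 3, 32, 32)
```

A fresh random transformation would be drawn for each training batch, every epoch, while the test images are left untouched.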

Summary

In this post you discovered how to create deep learning models in Keras for object recognition in photographs.

After working through this tutorial you learned:

- About the CIFAR-10 dataset and how to load it in Keras and plot ad hoc examples from the dataset.

- How to train and evaluate a simple Convolutional Neural Network on the problem.

- How to expand a simple Convolutional Neural Network into a deep Convolutional Neural Network in order to boost performance on the difficult problem.

- Ideas, such as more training epochs and data augmentation, that may give a further boost on the difficult object recognition problem.

Do you have any questions about object recognition or about this post? Ask your question in the comments and I will do my best to answer.


The post Object Recognition with Convolutional Neural Networks in the Keras Deep Learning Library appeared first on Machine Learning Mastery.
